Binary Classification#

Introduction#

Binary classification is a fundamental machine learning task in which a model assigns each input to one of two categories. This notebook uses a pretrained backbone, specifically ResNet, for binary classification. A pretrained model reuses features learned on a large dataset, so it can be adapted to a smaller, more specific task with relatively little training data.

ResNet, with its deep architecture and residual learning framework, captures fine-grained patterns in image data well, which makes it a good fit for tasks that depend on subtle visual differences. Starting from pretrained weights shortens training and typically improves performance when training data is limited.

This notebook also introduces an alternative way of building training samples: the cut-out technique. A sliding window selects windows systematically across the image, whereas the cut-out method cuts out each annotated defect so that the defect is centered in the training sample. The model therefore consistently sees the defect itself during training, which is intended to improve how reliably it classifies new windows.
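As a rough illustration of the cut-out idea, the sketch below computes a fixed-size crop box centered on a defect's bounding box and shifts it back inside the image when the defect lies near a border. The function name `centered_cutout_box`, the COCO-style `[x, y, width, height]` box format, and the 448-pixel window size are assumptions made for this example, and the image is assumed to be at least as large as the window. It is not the notebook's own cut-out code.

```python
def centered_cutout_box(bbox, image_width, image_height, window=448):
    """Return (x1, y1, x2, y2) of a window-sized crop centered on bbox.

    bbox is assumed to be COCO-style: [x, y, width, height].
    """
    x, y, w, h = bbox
    # Center of the defect
    cx = x + w / 2
    cy = y + h / 2
    # Top-left corner so that the defect center sits in the middle of the crop
    x1 = int(round(cx - window / 2))
    y1 = int(round(cy - window / 2))
    # Shift the crop back inside the image if it sticks out over a border
    x1 = max(0, min(x1, image_width - window))
    y1 = max(0, min(y1, image_height - window))
    return x1, y1, x1 + window, y1 + window


# A defect in the middle of the image stays centered
print(centered_cutout_box([1000, 600, 40, 20], 4000, 3000))  # (796, 386, 1244, 834)
# A defect near the top-left corner is clamped to the image border
print(centered_cutout_box([10, 5, 40, 20], 4000, 3000))      # (0, 0, 448, 448)
```

Clamping keeps every sample the same size, at the cost of the defect being slightly off-center for defects close to an image border.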

import glob
import json
import os
import random
import shutil

import cv2
import matplotlib.pyplot as plt
import numpy as np
import torch
from PIL import Image
from sahi.slicing import slice_coco, slice_image
from torch import nn
from torch.utils.data import DataLoader
from torchvision import datasets, transforms, models
from tqdm import tqdm

Configurable variables#

# Dataset path

dataset_directory = "../dataset/binary_window/full_bg_fault"

validate_test_directory = "../dataset/binary_window/full_bg_fault"

classes = ('bg', 'faults')

model_directory = "final_models" #directory where models are saved

# Training configuration

size = 224

batch_size = 32

learning_rate = 0.001

epochs = 20

model_name = "resnet50"

# Enable CUDA for faster training if a GPU is available

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

Dataloaders#

The dataset loading process involves two key operations:

Transformations: The data is normalized and converted into Tensor format, which is required by the Convolutional Neural Network (CNN).

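Handling Imbalanced Dataset: The imbalanced nature of the training dataset is addressed with a weighted random sampler, which draws each training sample with a probability inversely proportional to the size of its class, so that both classes appear about equally often during training.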

These steps ensure that the data is in the correct format for the CNN and that the training process is not biased by class imbalances.
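To make the effect of the weighted sampler concrete, the sketch below reproduces the inverse-frequency weighting in plain Python on a made-up, imbalanced label list. The label counts (90 background, 10 fault) are invented for the example. It only illustrates why each class ends up with the same total sampling weight.

```python
from collections import Counter

# Hypothetical imbalanced labels: 0 = background, 1 = fault
targets = [0] * 90 + [1] * 10

# Number of samples per class
class_counts = Counter(targets)

# Weight of a class is the inverse of its frequency
class_weights = {label: 1.0 / count for label, count in class_counts.items()}

# One weight per sample, in dataset order
sample_weights = [class_weights[label] for label in targets]

# Summed over all its samples, every class carries the same total weight,
# so sampling with these weights draws both classes about equally often
total_per_class = {
    label: sum(w for w, t in zip(sample_weights, targets) if t == label)
    for label in class_counts
}
print(class_weights)
print(total_per_class)
```

Because sampling is done with replacement, rare fault samples are repeated within an epoch while some background samples are not drawn at all.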

# Tensor transform

# Normalisation parameters
normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                 std=[0.229, 0.224, 0.225])

transform = {
    'train': transforms.Compose([
        transforms.Resize((size, size)),
        transforms.ToTensor(),
        normalize,
    ]),
    'validate': transforms.Compose([
        transforms.Resize((size, size)),
        transforms.ToTensor(),
        normalize,
    ]),
    'test': transforms.Compose([
        transforms.Resize((size, size)),
        transforms.ToTensor(),
        normalize,
    ]),
}

# Datasets

training_dataset = datasets.ImageFolder(dataset_directory+"/train", transform=transform['train'])

validation_dataset = datasets.ImageFolder(validate_test_directory+"/validate", transform=transform['validate'])

test_dataset = datasets.ImageFolder(validate_test_directory+"/test", transform=transform['test'])

datasets_dict = {'train': training_dataset, 'valid': validation_dataset, 'test': test_dataset}

# Use torch.unique to get the count of images per class

class_labels, class_counts = torch.unique(torch.tensor(training_dataset.targets), return_counts=True)

# Calculate class weights for sampler

class_weights = 1. / class_counts.float()

# List with weight value per sample (same length as dataset)

sample_weights = class_weights[training_dataset.targets]

# Create a WeightedRandomSampler

sampler = torch.utils.data.WeightedRandomSampler(weights=sample_weights, num_samples=len(sample_weights), replacement=True)

train_loader = torch.utils.data.DataLoader(datasets_dict['train'], batch_size=batch_size, sampler=sampler)

valid_loader = torch.utils.data.DataLoader(datasets_dict['valid'], batch_size=batch_size, shuffle=True)

test_loader = torch.utils.data.DataLoader(datasets_dict['test'], batch_size=batch_size, shuffle=True)

dataloaders_dict = {'train': train_loader, 'valid': valid_loader, 'test': test_loader}

CNN training#

The CNN is trained with the ResNet50 pretrained backbone. All layers are finetuned.

import copy

def train_model(model, model_name, size):
    # To train only the new output layer, freeze the feature extractor first:
    # for param in model.parameters(): param.requires_grad = False

    # Change the last output layer to 2 classes
    n_inputs = model.fc.in_features
    last_layer = nn.Linear(n_inputs, len(classes))
    model.fc = last_layer

    # Move the model to the selected device (cuda if available)
    model = model.to(device)
    print(model)

    # Set the optimizer
    optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

    # Set the loss function and move it to the selected device
    criterion = nn.CrossEntropyLoss()
    criterion = criterion.to(device)

    # Learning curves
    results = {"training_acc": [], "training_loss": [],
               "validation_acc": [], "validation_loss": [],
               "training_recall": [], "validation_recall": [],
               "training_precision": [], "validation_precision": []}

    # Best validation metrics so far
    best_f2 = 0
    best_precision = 0
    best_recall = 0
    best_accuracy = 0
    best_model = None

    # Loop over all epochs
    for e in range(epochs):
        print('Epoch {}/{}'.format(e+1, epochs))

        # Each epoch has a training and validation phase
        for phase in ['train', 'valid']:
            # Set the network state dependent on the phase
            if phase == 'train':
                model.train()
            else:
                model.eval()

            # Reset the losses and corrects at the start of each phase
            running_loss = 0.0
            running_corrects = 0.0
            tp = 0  # true positive
            fn = 0  # false negative
            fp = 0  # false positive
            num_samples = 0

            # Extract batches from the data loader until all batches are retrieved
            for inputs, labels in dataloaders_dict[phase]:
                # Number of samples in this batch (the last batch may be smaller)
                n_batch = inputs.size(0)

                # Store the inputs and labels on the selected device (cuda)
                inputs = inputs.to(device)
                labels = labels.to(device)

                # Forward pass; gradients are only tracked in the training phase
                with torch.set_grad_enabled(phase == 'train'):
                    outputs = model(inputs)
                    # The predicted class is the index of the largest output (argmax)
                    _, class_pred = torch.max(outputs, 1)
                    # Compute the loss of the batch
                    loss = criterion(outputs, labels)

                # Update the losses and corrects for this batch
                running_loss += loss.item() * n_batch
                running_corrects += class_pred.eq(labels).sum().item()
                num_samples += n_batch

                # Recall & precision counts
                tp += ((class_pred == 1) & (labels == 1)).sum().item()
                fn += ((class_pred == 0) & (labels == 1)).sum().item()
                fp += ((class_pred == 1) & (labels == 0)).sum().item()

                # In the training phase, adapt the model parameters to reduce the loss
                if phase == 'train':
                    optimizer.zero_grad()  # PyTorch accumulates gradients across backward passes, so reset them first
                    loss.backward()        # Calculate the weight gradients (backward propagation)
                    optimizer.step()       # Update the model parameters using the defined optimizer

            # Save results
            recall = tp / (tp + fn) if (tp + fn) > 0 else 0
            precision = tp / (tp + fp) if (tp + fp) > 0 else 0

            if phase == 'train':
                results['training_acc'].append((running_corrects/num_samples)*100)
                results['training_loss'].append(running_loss/num_samples)
                results['training_recall'].append(recall)
                results['training_precision'].append(precision)
                print("train_acc %.3f train_loss %.3f train_recall %.3f train_precision %.3f" % (running_corrects/num_samples, running_loss/num_samples, recall, precision))
            elif phase == 'valid':
                results['validation_acc'].append((running_corrects/num_samples)*100)
                results['validation_loss'].append(running_loss/num_samples)
                results['validation_recall'].append(recall)
                results['validation_precision'].append(precision)
                print("val_acc %.3f val_loss %.3f val_recall %.3f val_precision %.3f" % (running_corrects/num_samples, running_loss/num_samples, recall, precision))

            # Guard against division by zero when precision and recall are both 0
            f2_score = 5*precision*recall/(4*precision + recall) if (precision + recall) > 0 else 0

            # Deep copy the model; the F2 score is used since false negatives are more costly.
            # Without the copy, best_model would keep changing as training continues.
            if phase == 'valid' and f2_score > best_f2:
                print("F2 score: "+str(f2_score))
                print('-'*10)
                best_f2 = f2_score
                best_model = copy.deepcopy(model)
                best_recall = recall
                best_precision = precision
                best_accuracy = running_corrects/num_samples

    # Print
    print('Best val f2: {:.4f}'.format(best_f2))

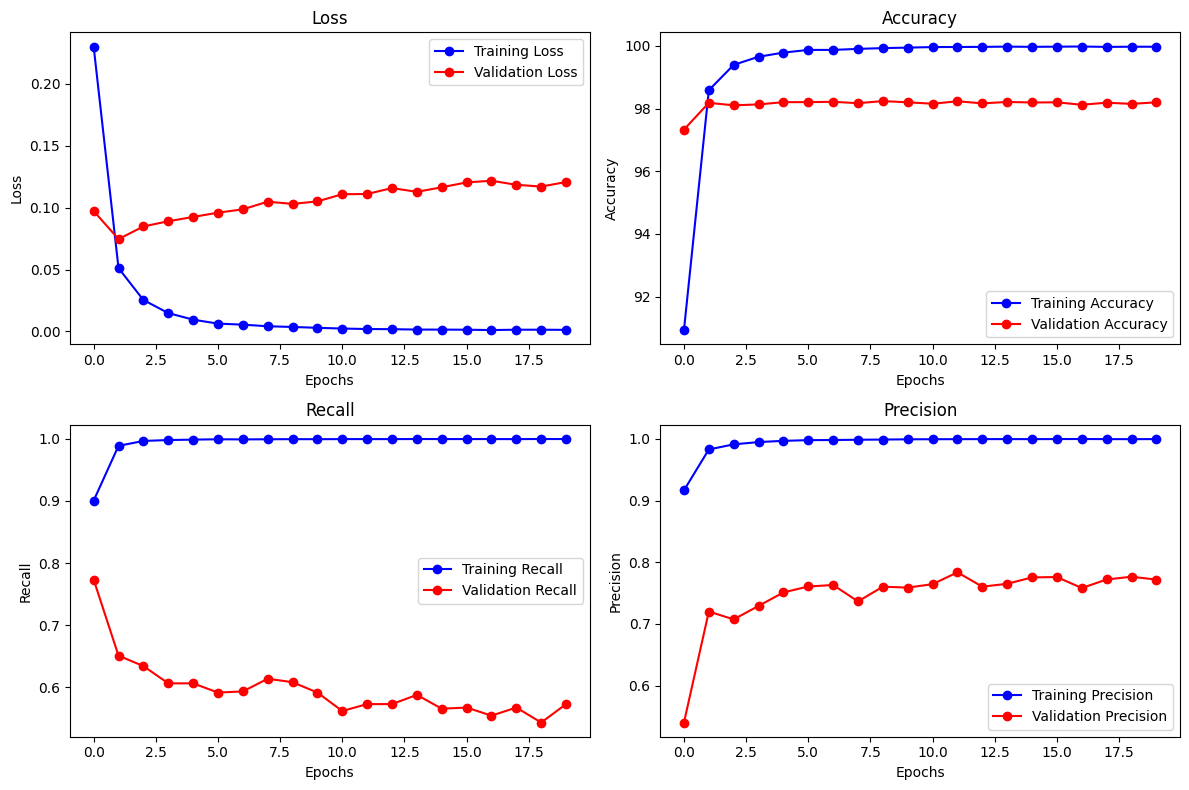

    fig, axs = plt.subplots(2, 2, figsize=(12, 8))

    # Plot training and validation loss
    axs[0, 0].plot(range(len(results['training_loss'])), results['training_loss'], 'bo-', label='Training Loss')
    axs[0, 0].plot(range(len(results['validation_loss'])), results['validation_loss'], 'ro-', label='Validation Loss')
    axs[0, 0].set_title('Loss')
    axs[0, 0].set_xlabel('Epochs')
    axs[0, 0].set_ylabel('Loss')
    axs[0, 0].legend()

    # Plot training and validation accuracy
    axs[0, 1].plot(range(len(results['training_acc'])), results['training_acc'], 'bo-', label='Training Accuracy')
    axs[0, 1].plot(range(len(results['validation_acc'])), results['validation_acc'], 'ro-', label='Validation Accuracy')
    axs[0, 1].set_title('Accuracy')
    axs[0, 1].set_xlabel('Epochs')
    axs[0, 1].set_ylabel('Accuracy')
    axs[0, 1].legend()

    # Plot training and validation recall
    axs[1, 0].plot(range(len(results['training_recall'])), results['training_recall'], 'bo-', label='Training Recall')
    axs[1, 0].plot(range(len(results['validation_recall'])), results['validation_recall'], 'ro-', label='Validation Recall')
    axs[1, 0].set_title('Recall')
    axs[1, 0].set_xlabel('Epochs')
    axs[1, 0].set_ylabel('Recall')
    axs[1, 0].legend()

    # Plot training and validation precision
    axs[1, 1].plot(range(len(results['training_precision'])), results['training_precision'], 'bo-', label='Training Precision')
    axs[1, 1].plot(range(len(results['validation_precision'])), results['validation_precision'], 'ro-', label='Validation Precision')
    axs[1, 1].set_title('Precision')
    axs[1, 1].set_xlabel('Epochs')
    axs[1, 1].set_ylabel('Precision')
    axs[1, 1].legend()

    plt.tight_layout()
    plt.show()

    return best_f2, best_accuracy, best_recall, best_precision, best_model

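# 'pretrained=True' is deprecated since torchvision 0.13; this weight enum is the equivalent
fn_model = models.resnet50(weights=models.ResNet50_Weights.IMAGENET1K_V1)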

print(f"Is CUDA supported by this system? {torch.cuda.is_available()}")

best_f2, best_accuracy, best_recall, best_precision, best_model = train_model(fn_model, model_name, size)

print(model_name)

print("Best F2 Score:", best_f2)

print("Best Accuracy:", best_accuracy)

print("Best Recall:", best_recall)

print("Best Precision:", best_precision)

# save model

model_path = model_directory+'/'+model_name+'.pth'

os.makedirs(model_directory, exist_ok=True)

torch.save(best_model.state_dict(), model_path)

c:\Users\admin\AppData\Local\Programs\Python\Python312\Lib\site-packages\torchvision\models\_utils.py:208: UserWarning: The parameter 'pretrained' is deprecated since 0.13 and may be removed in the future, please use 'weights' instead.

warnings.warn(

c:\Users\admin\AppData\Local\Programs\Python\Python312\Lib\site-packages\torchvision\models\_utils.py:223: UserWarning: Arguments other than a weight enum or `None` for 'weights' are deprecated since 0.13 and may be removed in the future. The current behavior is equivalent to passing `weights=ResNet50_Weights.IMAGENET1K_V1`. You can also use `weights=ResNet50_Weights.DEFAULT` to get the most up-to-date weights.

warnings.warn(msg)

Is CUDA supported by this system? True

ResNet(

(conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(layer1): Sequential(

(0): Bottleneck(

(conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

)

(layer2): Sequential(

(0): Bottleneck(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

)

(layer3): Sequential(

(0): Bottleneck(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(4): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(5): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

)

(layer4): Sequential(

(0): Bottleneck(

(conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

)

(avgpool): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Linear(in_features=2048, out_features=2, bias=True)

)

Epoch 1/20

train_acc 0.909 train_loss 0.230 train_recall 0.901 train_precision 0.917

val_acc 0.973 val_loss 0.097 val_recall 0.773 val_precision 0.540

F2 score: 0.7118412046543465

----------

Epoch 2/20

train_acc 0.986 train_loss 0.051 train_recall 0.989 train_precision 0.983

val_acc 0.982 val_loss 0.075 val_recall 0.651 val_precision 0.720

Epoch 3/20

train_acc 0.994 train_loss 0.025 train_recall 0.997 train_precision 0.991

val_acc 0.981 val_loss 0.085 val_recall 0.634 val_precision 0.707

Epoch 4/20

train_acc 0.997 train_loss 0.015 train_recall 0.998 train_precision 0.995

val_acc 0.981 val_loss 0.089 val_recall 0.606 val_precision 0.729

Epoch 5/20

train_acc 0.998 train_loss 0.009 train_recall 0.999 train_precision 0.997

val_acc 0.982 val_loss 0.092 val_recall 0.606 val_precision 0.751

Epoch 6/20

train_acc 0.999 train_loss 0.006 train_recall 0.999 train_precision 0.998

val_acc 0.982 val_loss 0.096 val_recall 0.591 val_precision 0.761

Epoch 7/20

train_acc 0.999 train_loss 0.005 train_recall 0.999 train_precision 0.998

val_acc 0.982 val_loss 0.099 val_recall 0.593 val_precision 0.763

Epoch 8/20

train_acc 0.999 train_loss 0.004 train_recall 0.999 train_precision 0.999

val_acc 0.982 val_loss 0.105 val_recall 0.613 val_precision 0.737

Epoch 9/20

train_acc 0.999 train_loss 0.004 train_recall 1.000 train_precision 0.999

val_acc 0.982 val_loss 0.103 val_recall 0.608 val_precision 0.760

Epoch 10/20

train_acc 0.999 train_loss 0.003 train_recall 1.000 train_precision 0.999

val_acc 0.982 val_loss 0.105 val_recall 0.591 val_precision 0.759

Epoch 11/20

train_acc 1.000 train_loss 0.002 train_recall 1.000 train_precision 0.999

val_acc 0.982 val_loss 0.111 val_recall 0.561 val_precision 0.765

Epoch 12/20

train_acc 1.000 train_loss 0.002 train_recall 1.000 train_precision 1.000

val_acc 0.982 val_loss 0.111 val_recall 0.572 val_precision 0.784

Epoch 13/20

train_acc 1.000 train_loss 0.002 train_recall 1.000 train_precision 1.000

val_acc 0.982 val_loss 0.116 val_recall 0.572 val_precision 0.760

Epoch 14/20

train_acc 1.000 train_loss 0.001 train_recall 1.000 train_precision 1.000

val_acc 0.982 val_loss 0.113 val_recall 0.587 val_precision 0.765

Epoch 15/20

train_acc 1.000 train_loss 0.001 train_recall 1.000 train_precision 1.000

val_acc 0.982 val_loss 0.116 val_recall 0.565 val_precision 0.776

Epoch 16/20

train_acc 1.000 train_loss 0.001 train_recall 1.000 train_precision 1.000

val_acc 0.982 val_loss 0.120 val_recall 0.567 val_precision 0.776

Epoch 17/20

train_acc 1.000 train_loss 0.001 train_recall 1.000 train_precision 1.000

val_acc 0.981 val_loss 0.122 val_recall 0.554 val_precision 0.758

Epoch 18/20

train_acc 1.000 train_loss 0.001 train_recall 1.000 train_precision 1.000

val_acc 0.982 val_loss 0.118 val_recall 0.567 val_precision 0.772

Epoch 19/20

train_acc 1.000 train_loss 0.001 train_recall 1.000 train_precision 1.000

val_acc 0.981 val_loss 0.117 val_recall 0.543 val_precision 0.777

Epoch 20/20

train_acc 1.000 train_loss 0.001 train_recall 1.000 train_precision 1.000

val_acc 0.982 val_loss 0.121 val_recall 0.572 val_precision 0.772

Best val f2: 0.7118

resnet50_eu_eik

Best F2 Score: 0.7118412046543465

Best Accuracy: 0.9733019238319591

Best Recall: 0.7732342007434945

Best Precision: 0.5402597402597402
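The reported F2 score can be checked from the reported precision and recall. The F-beta score with beta = 2 treats recall as more important than precision, which suits a setting where missed faults (false negatives) are the more costly error. The helper name `f_beta` is introduced only for this check.

```python
def f_beta(precision, recall, beta=2.0):
    """F-beta score; returns 0.0 when precision and recall are both 0."""
    denom = beta ** 2 * precision + recall
    if denom == 0:
        return 0.0
    return (1 + beta ** 2) * precision * recall / denom


# Validation precision and recall reported above for the best epoch
precision = 0.5402597402597402
recall = 0.7732342007434945

print(round(f_beta(precision, recall), 4))  # 0.7118
```

With beta = 2 the formula reduces to `5 * precision * recall / (4 * precision + recall)`, the expression used in the training loop.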

Test evaluation#

The model is applied to the test dataset in combination with a sliding window: each test sample is one window cut from a full image.
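A sliding window over an image can be sketched as follows. The window size of 448 pixels and the stride of 359 pixels (roughly 20% overlap) are inferred from the window coordinates in the test file names and should be treated as assumptions, as should the helper names `window_starts` and `sliding_windows`. The last window on each axis is shifted back so that it ends exactly at the image border, which appears consistent with file names such as `..._10352_7441_10800_7889.png`.

```python
def window_starts(length, window=448, stride=359):
    """Start offsets of sliding windows along one image axis."""
    if length <= window:
        return [0]
    starts = []
    pos = 0
    while pos + window < length:
        starts.append(pos)
        pos += stride
    # Final window is aligned with the border so no pixels are skipped
    starts.append(length - window)
    return starts


def sliding_windows(width, height, window=448, stride=359):
    """All (x1, y1, x2, y2) windows covering a width x height image."""
    return [
        (x, y, x + window, y + window)
        for y in window_starts(height, window, stride)
        for x in window_starts(width, window, stride)
    ]


print(window_starts(1000))               # [0, 359, 552]
print(len(sliding_windows(1000, 1000)))  # 9
```

Each window would then be resized and classified as background or fault by the trained model.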

# Normalisation parameters

normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225])

class ImageFolderWithPaths(datasets.ImageFolder):
    """Custom dataset that includes image file paths. Extends torchvision.datasets.ImageFolder"""

    def __getitem__(self, index):
        # Call the original __getitem__
        original_tuple = super().__getitem__(index)
        # Get the image path
        path = self.imgs[index][0]
        # Extract the filename from the path
        filename = os.path.basename(path)
        # Add the filename to the original tuple
        tuple_with_filename = original_tuple + (filename,)
        return tuple_with_filename

data_dir = validate_test_directory + "/test"

# Define transformations
transformed_dataset = ImageFolderWithPaths(data_dir, transform=transforms.Compose([
    transforms.Resize((size, size)),
    transforms.ToTensor(),
    normalize,
]))

dataloader = DataLoader(transformed_dataset, batch_size=1, shuffle=False)

# Get the main COCO file with all windows and their corresponding faults
with open('../dataset/sahi_output/test/sahi_coco.json') as f:
    data = json.load(f)

# Category dict: category id -> category name
cat_dict = {}
for i in data['categories']:
    cat_dict[i['id']] = i['name']

# Fault dict: image id -> fault name (if a window has several annotations, the last one wins)
fault_dict = {}
for i in data['annotations']:
    fault_dict[i['image_id']] = cat_dict[i['category_id']]

# Path dict: window file name -> fault name, only for windows that contain a fault
path_dict = {}
for i in data['images']:
    if i['id'] in fault_dict:
        path_dict[i['file_name']] = fault_dict[i['id']]

print(path_dict)

# Create confusion matrix dict: one row per fault category plus one for background
confusion_matrix_dict = {}
for key, val in cat_dict.items():
    confusion_matrix_dict[val] = {"guess_bg": 0, "guess_fault": 0}
confusion_matrix_dict[classes[0]] = {"guess_bg": 0, "guess_fault": 0}

def evaluate_model(model, test_loader, device):
    # Ensure model is in evaluation mode
    model.eval()

    # Initialize metrics
    running_corrects = 0
    tp = 0  # true positives
    fn = 0  # false negatives
    fp = 0  # false positives
    total_samples = 0

    # No gradient is needed for evaluation
    with torch.no_grad():
        # test_loader must yield (inputs, labels, filename) with batch_size=1
        for inputs, labels, filename in test_loader:
            inputs = inputs.to(device)
            labels = labels.to(device)

            # Forward pass
            outputs = model(inputs)
            _, preds = torch.max(outputs, 1)

            # Update metrics
            running_corrects += torch.sum(preds == labels).item()
            tp += ((preds == 1) & (labels == 1)).sum().item()
            fn += ((preds == 0) & (labels == 1)).sum().item()
            fp += ((preds == 1) & (labels == 0)).sum().item()
            total_samples += inputs.size(0)

            # Confusion matrix update, 1 = positive = faulty
            if labels.item() == 0:
                # Input is a background image
                if preds.item() == 1:
                    confusion_matrix_dict[classes[0]]["guess_fault"] += 1
                else:
                    confusion_matrix_dict[classes[0]]["guess_bg"] += 1
            else:
                # Input is a faulty image
                name = filename[0]
                if name in path_dict:
                    window_fault = path_dict[name]
                    if preds.item() == 1:
                        confusion_matrix_dict[window_fault]["guess_fault"] += 1
                    else:
                        confusion_matrix_dict[window_fault]["guess_bg"] += 1
                else:
                    print(f"No fault annotation found for {name}")

    # Calculate metrics
    accuracy = running_corrects / total_samples
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0

    return accuracy, recall, precision

# Evaluate 'best_model' from training on the loader that also returns file names.
# The original function ignored its test_loader argument and iterated over this same 'dataloader'.
accuracy, recall, precision = evaluate_model(best_model, dataloader, device)

print(f"Test Accuracy: {accuracy:.4f}")
print(f"Test Recall: {recall:.4f}")
print(f"Test Precision: {precision:.4f}")

{'PO22-34546_9_1_annotated_11129_0_11577_448.png': 'open fout', 'PO22-34546_9_1_annotated_11168_0_11616_448.png': 'open fout', 'PO22-34546_9_1_annotated_11129_718_11577_1166.png': 'open fout', 'PO22-34546_9_1_annotated_11168_718_11616_1166.png': 'open fout', 'PO22-34549_7_1_annotated_0_0_448_448.png': 'open fout', 'PO22-34549_7_1_annotated_3590_0_4038_448.png': 'open fout', 'PO22-34549_7_1_annotated_3949_0_4397_448.png': 'open fout', 'PO22-34549_7_1_annotated_4308_0_4756_448.png': 'open fout', 'PO22-34549_7_1_annotated_4667_0_5115_448.png': 'open fout', 'PO22-34549_7_1_annotated_5026_0_5474_448.png': 'open fout', 'PO22-34549_7_1_annotated_5385_0_5833_448.png': 'open fout', 'PO22-34549_7_1_annotated_6103_0_6551_448.png': 'open fout', 'PO22-34549_7_1_annotated_6462_0_6910_448.png': 'open fout', 'PO22-34549_7_1_annotated_6821_0_7269_448.png': 'open fout', 'PO22-34549_7_1_annotated_7180_0_7628_448.png': 'open fout', 'PO22-34549_7_1_annotated_7539_0_7987_448.png': 'open fout', 'PO22-34549_7_1_annotated_7898_0_8346_448.png': 'open fout', 'PO22-34549_7_1_annotated_8257_0_8705_448.png': 'open fout', 'PO22-34549_7_1_annotated_8616_0_9064_448.png': 'open fout', 'PO22-34549_7_1_annotated_8975_0_9423_448.png': 'open fout', 'PO22-34565_25_1_annotated_9334_7441_9782_7889.png': 'open fout', 'PO22-34565_25_1_annotated_9693_7441_10141_7889.png': 'open fout', 'PO22-34565_25_1_annotated_10052_7441_10500_7889.png': 'open fout', 'PO22-34565_25_1_annotated_10352_7441_10800_7889.png': 'open fout', 'PO22-34570_4_2_annotated_10444_0_10892_448.png': 'open fout', 'PO22-34570_4_2_annotated_10444_359_10892_807.png': 'open fout', 'PO22-34570_4_2_annotated_10444_4308_10892_4756.png': 'open fout', 'PO22-34570_4_2_annotated_10444_4667_10892_5115.png': 'open fout', 'PO22-34570_4_2_annotated_10444_5026_10892_5474.png': 'open fout', 'PO22-34570_4_2_annotated_10444_5385_10892_5833.png': 'open fout', 'PO22-34570_4_2_annotated_10444_5744_10892_6192.png': 'open fout', 
'PO22-34570_4_2_annotated_10411_6103_10859_6551.png': 'open fout', 'PO22-34570_4_2_annotated_10444_6103_10892_6551.png': 'open fout', 'PO22-34570_4_2_annotated_10411_6462_10859_6910.png': 'open fout', 'PO22-34570_4_2_annotated_10444_6462_10892_6910.png': 'open fout', 'PO22-34570_4_2_annotated_10411_6821_10859_7269.png': 'open fout', 'PO22-34570_4_2_annotated_10444_6821_10892_7269.png': 'open fout', 'PO22-34595_45_2_annotated_11488_0_11936_448.png': 'open fout', 'PO22-34595_45_2_annotated_11542_0_11990_448.png': 'open fout', 'PO22-34666_3_2_annotated_9238_0_9686_448.png': 'zaag', 'PO22-34666_3_2_annotated_9238_359_9686_807.png': 'zaag', 'PO22-34666_3_2_annotated_9238_718_9686_1166.png': 'zaag', 'PO22-34666_3_2_annotated_9238_1077_9686_1525.png': 'zaag', 'PO22-34666_3_2_annotated_9238_1436_9686_1884.png': 'zaag', 'PO22-34666_3_2_annotated_9238_1795_9686_2243.png': 'zaag', 'PO22-34666_3_2_annotated_9238_2154_9686_2602.png': 'zaag', 'PO22-34666_3_2_annotated_9238_2513_9686_2961.png': 'zaag', 'PO22-34666_3_2_annotated_9238_2872_9686_3320.png': 'zaag', 'PO22-34666_3_2_annotated_9238_3231_9686_3679.png': 'zaag', 'PO22-34666_3_2_annotated_9238_3590_9686_4038.png': 'zaag', 'PO22-34913_39_2_annotated_0_1436_448_1884.png': 'ongekend', 'PO22-34913_39_2_annotated_0_1795_448_2243.png': 'ongekend', 'PO22-34913_39_2_annotated_7180_1795_7628_2243.png': 'open fout', 'PO22-34913_39_2_annotated_7539_1795_7987_2243.png': 'open fout', 'PO22-34913_39_2_annotated_7898_1795_8346_2243.png': 'open fout', 'PO22-34913_39_2_annotated_8257_1795_8705_2243.png': 'open fout', 'PO22-34913_39_2_annotated_8548_1795_8996_2243.png': 'open fout', 'PO22-35136_63_2_annotated_2513_2513_2961_2961.png': 'open fout', 'PO22-35136_63_2_annotated_2872_2513_3320_2961.png': 'open fout', 'PO22-35136_63_2_annotated_3231_2513_3679_2961.png': 'open fout', 'PO22-35136_63_2_annotated_3590_2513_4038_2961.png': 'open fout', 'PO22-35136_63_2_annotated_3949_2513_4397_2961.png': 'open fout', 
'PO22-35136_63_2_annotated_4308_2513_4756_2961.png': 'open fout', 'PO22-35136_63_2_annotated_4667_2513_5115_2961.png': 'open fout', 'PO22-35136_63_2_annotated_5026_2513_5474_2961.png': 'open fout', 'PO22-35136_63_2_annotated_5385_2513_5833_2961.png': 'open fout', 'PO22-35136_63_2_annotated_5744_2513_6192_2961.png': 'open fout', 'PO22-35136_63_2_annotated_6103_2513_6551_2961.png': 'open fout', 'PO22-35136_63_2_annotated_6462_2513_6910_2961.png': 'open fout', 'PO22-35136_63_2_annotated_6821_2513_7269_2961.png': 'open fout', 'PO22-35136_63_2_annotated_7180_2513_7628_2961.png': 'open fout', 'PO22-35136_63_2_annotated_7539_2513_7987_2961.png': 'open fout', 'PO22-35136_63_2_annotated_7898_2513_8346_2961.png': 'open fout', 'PO22-35136_63_2_annotated_8257_2513_8705_2961.png': 'open fout', 'PO22-35328_28_1_annotated_9690_0_10138_448.png': 'zaag', 'PO22-35328_28_1_annotated_9690_359_10138_807.png': 'zaag', 'PO22-35328_28_1_annotated_9690_718_10138_1166.png': 'zaag', 'PO22-35328_28_1_annotated_9690_1077_10138_1525.png': 'zaag', 'PO22-35328_28_1_annotated_9690_1436_10138_1884.png': 'zaag', 'PO22-35328_28_1_annotated_9690_1795_10138_2243.png': 'zaag', 'PO22-35328_28_1_annotated_9690_2154_10138_2602.png': 'zaag', 'PO22-35328_28_1_annotated_9690_2513_10138_2961.png': 'zaag', 'PO22-35328_28_1_annotated_9690_2872_10138_3320.png': 'zaag', 'PO22-35328_28_1_annotated_9690_3231_10138_3679.png': 'zaag', 'PO22-35328_28_1_annotated_9690_3590_10138_4038.png': 'zaag', 'PO22-35474_25_2_annotated_15431_0_15879_448.png': 'open fout', 'PO22-35474_25_2_annotated_15431_1077_15879_1525.png': 'open fout', 'PO22-35474_25_2_annotated_15431_1436_15879_1884.png': 'open fout', 'PO22-35474_25_2_annotated_15431_2513_15879_2961.png': 'open fout', 'PO22-35474_25_2_annotated_15431_2872_15879_3320.png': 'open fout', 'PO22-35474_25_2_annotated_15431_3590_15879_4038.png': 'open fout', 'PO23-00458_1_1_annotated_9685_0_10133_448.png': 'zaag', 'PO23-00458_1_1_annotated_9685_359_10133_807.png': 'zaag', 
'PO23-00458_1_1_annotated_9685_718_10133_1166.png': 'zaag', 'PO23-00458_1_1_annotated_9685_1077_10133_1525.png': 'zaag', 'PO23-00458_1_1_annotated_9685_1436_10133_1884.png': 'zaag', 'PO23-00458_1_1_annotated_9685_1795_10133_2243.png': 'zaag', 'PO23-00458_1_1_annotated_9685_2154_10133_2602.png': 'zaag', 'PO23-00458_1_1_annotated_9685_2513_10133_2961.png': 'zaag', 'PO23-00458_1_1_annotated_9685_2872_10133_3320.png': 'zaag', 'PO23-00458_1_1_annotated_9685_3231_10133_3679.png': 'zaag', 'PO23-00458_1_1_annotated_9685_3590_10133_4038.png': 'zaag', 'PO23-00458_1_1_annotated_9685_3949_10133_4397.png': 'zaag', 'PO23-00458_1_1_annotated_0_4082_448_4530.png': 'zaag', 'PO23-00458_1_1_annotated_359_4082_807_4530.png': 'zaag', 'PO23-00458_1_1_annotated_718_4082_1166_4530.png': 'zaag', 'PO23-00458_1_1_annotated_1077_4082_1525_4530.png': 'zaag', 'PO23-00458_1_1_annotated_1436_4082_1884_4530.png': 'zaag', 'PO23-00458_1_1_annotated_1795_4082_2243_4530.png': 'zaag', 'PO23-00458_1_1_annotated_9685_4082_10133_4530.png': 'zaag', 'PO23-00459_69_1_annotated_11488_3949_11936_4397.png': 'zaag', 'PO23-00459_69_1_annotated_11847_3949_12295_4397.png': 'zaag', 'PO23-00459_69_1_annotated_12184_3949_12632_4397.png': 'zaag', 'PO23-00459_69_1_annotated_11488_3967_11936_4415.png': 'zaag', 'PO23-00459_69_1_annotated_11847_3967_12295_4415.png': 'zaag', 'PO23-00459_69_1_annotated_12184_3967_12632_4415.png': 'zaag', 'PO23-00610_53_1_annotated_1795_4277_2243_4725.png': 'open voeg', 'PO23-00610_53_1_annotated_2154_4277_2602_4725.png': 'open voeg', 'PO23-00610_53_1_annotated_2513_4277_2961_4725.png': 'open voeg', 'PO23-00610_53_1_annotated_2872_4277_3320_4725.png': 'open voeg', 'PO23-00610_53_1_annotated_3231_4277_3679_4725.png': 'open voeg', 'PO23-00610_53_1_annotated_3590_4277_4038_4725.png': 'open voeg', 'PO23-00610_53_1_annotated_3949_4277_4397_4725.png': 'open voeg', 'PO23-00610_53_1_annotated_4308_4277_4756_4725.png': 'open voeg', 'PO23-00610_53_1_annotated_4667_4277_5115_4725.png': 'open voeg', 
'PO23-00610_53_1_annotated_5026_4277_5474_4725.png': 'open voeg', 'PO23-00610_53_1_annotated_5385_4277_5833_4725.png': 'open voeg', 'PO23-00610_53_1_annotated_5744_4277_6192_4725.png': 'open voeg', 'PO23-00610_53_1_annotated_6103_4277_6551_4725.png': 'open voeg', 'PO23-00610_53_1_annotated_6462_4277_6910_4725.png': 'open voeg', 'PO23-00923_155_2_annotated_6821_718_7269_1166.png': 'kras', 'PO23-00923_155_2_annotated_7180_718_7628_1166.png': 'kras', 'PO23-00923_155_2_annotated_7180_1077_7628_1525.png': 'kras', 'PO23-01592_242_1_annotated_0_3949_448_4397.png': 'zaag', 'PO23-01592_242_1_annotated_359_3949_807_4397.png': 'zaag', 'PO23-01592_242_1_annotated_718_3949_1166_4397.png': 'zaag', 'PO23-01592_242_1_annotated_7180_3949_7628_4397.png': 'zaag', 'PO23-01592_242_1_annotated_0_4122_448_4570.png': 'zaag', 'PO23-01592_242_1_annotated_359_4122_807_4570.png': 'zaag', 'PO23-01592_242_1_annotated_718_4122_1166_4570.png': 'zaag', 'PO23-01592_242_1_annotated_6103_4122_6551_4570.png': 'zaag', 'PO23-01592_242_1_annotated_6462_4122_6910_4570.png': 'zaag', 'PO23-01592_242_1_annotated_6821_4122_7269_4570.png': 'zaag', 'PO23-01592_242_1_annotated_7180_4122_7628_4570.png': 'zaag', 'PO23-01592_242_1_annotated_9668_4122_10116_4570.png': 'zaag', 'PO23-05505_160_2_annotated_1436_0_1884_448.png': 'vlek', 'PO23-05505_160_2_annotated_1795_0_2243_448.png': 'vlek', 'PO23-05505_160_2_annotated_2872_0_3320_448.png': 'kras', 'PO23-05505_160_2_annotated_6103_0_6551_448.png': 'vlek', 'PO23-05505_160_2_annotated_7539_0_7987_448.png': 'veneer piece', 'PO23-05505_160_2_annotated_7898_0_8346_448.png': 'veneer piece', 'PO23-05505_160_2_annotated_2872_359_3320_807.png': 'kras', 'PO23-05505_160_2_annotated_2872_718_3320_1166.png': 'kras', 'PO23-05505_160_2_annotated_2872_1077_3320_1525.png': 'kras', 'PO23-05505_160_2_annotated_2872_1436_3320_1884.png': 'kras', 'PO23-05505_160_2_annotated_5744_1436_6192_1884.png': 'kras', 'PO23-05505_160_2_annotated_2872_1795_3320_2243.png': 'kras', 
'PO23-05505_160_2_annotated_5744_1795_6192_2243.png': 'kras', 'PO23-05505_160_2_annotated_2872_2154_3320_2602.png': 'kras', 'PO23-05505_160_2_annotated_2872_2513_3320_2961.png': 'kras', 'PO23-05505_160_2_annotated_2872_2872_3320_3320.png': 'kras', 'PO23-05505_160_2_annotated_2872_3231_3320_3679.png': 'kras', 'PO23-05505_160_2_annotated_2872_3590_3320_4038.png': 'kras', 'PO23-05505_160_2_annotated_2872_3949_3320_4397.png': 'kras', 'PO23-05505_160_2_annotated_1795_4308_2243_4756.png': 'kras', 'PO23-05505_160_2_annotated_2154_4308_2602_4756.png': 'kras', 'PO23-05505_160_2_annotated_2513_4308_2961_4756.png': 'kras', 'PO23-05505_160_2_annotated_2872_4308_3320_4756.png': 'kras', 'PO23-05505_160_2_annotated_1795_4411_2243_4859.png': 'kras', 'PO23-05505_160_2_annotated_2154_4411_2602_4859.png': 'kras', 'PO23-05505_160_2_annotated_2513_4411_2961_4859.png': 'kras', 'PO23-05505_160_2_annotated_2872_4411_3320_4859.png': 'kras', 'PO23-05989_23_2_annotated_6051_0_6499_448.png': 'open fout', 'PO23-05989_23_2_annotated_6051_359_6499_807.png': 'open fout', 'PO23-05989_23_2_annotated_6051_718_6499_1166.png': 'open fout', 'PO23-05989_23_2_annotated_6051_1077_6499_1525.png': 'open fout', 'PO23-05989_23_2_annotated_6051_1436_6499_1884.png': 'open fout', 'PO23-05989_23_2_annotated_6051_1795_6499_2243.png': 'open fout', 'PO23-05989_23_2_annotated_6051_2154_6499_2602.png': 'open fout', 'PO23-05989_23_2_annotated_6051_2513_6499_2961.png': 'open fout', 'PO23-05989_23_2_annotated_6051_2872_6499_3320.png': 'open fout', 'PO23-05989_23_2_annotated_6051_3231_6499_3679.png': 'open fout', 'PO23-05989_23_2_annotated_6051_3590_6499_4038.png': 'open fout', 'PO23-05989_23_2_annotated_1077_4308_1525_4756.png': 'open fout', 'PO23-05989_23_2_annotated_1436_4308_1884_4756.png': 'open fout', 'PO23-05989_23_2_annotated_1795_4308_2243_4756.png': 'open fout', 'PO23-05989_23_2_annotated_2154_4308_2602_4756.png': 'open fout', 'PO23-05989_23_2_annotated_2513_4308_2961_4756.png': 'open fout', 
'PO23-05989_23_2_annotated_2872_4308_3320_4756.png': 'open fout', 'PO23-05989_23_2_annotated_3231_4308_3679_4756.png': 'open fout', 'PO23-05989_23_2_annotated_3590_4308_4038_4756.png': 'open fout', 'PO23-05989_23_2_annotated_3949_4308_4397_4756.png': 'open fout', 'PO23-05989_23_2_annotated_4308_4308_4756_4756.png': 'open fout', 'PO23-05989_23_2_annotated_4667_4308_5115_4756.png': 'open fout', 'PO23-05989_23_2_annotated_5026_4308_5474_4756.png': 'open fout', 'PO23-05989_23_2_annotated_5385_4308_5833_4756.png': 'open fout', 'PO23-05989_23_2_annotated_5744_4308_6192_4756.png': 'open fout', 'PO23-05989_23_2_annotated_6051_4308_6499_4756.png': 'open fout', 'PO23-05989_23_2_annotated_0_4376_448_4824.png': 'open fout', 'PO23-05989_23_2_annotated_359_4376_807_4824.png': 'open fout', 'PO23-05989_23_2_annotated_718_4376_1166_4824.png': 'open fout', 'PO23-05989_23_2_annotated_1077_4376_1525_4824.png': 'open fout', 'PO23-05989_23_2_annotated_1436_4376_1884_4824.png': 'open fout', 'PO23-05989_23_2_annotated_1795_4376_2243_4824.png': 'open fout', 'PO23-05989_23_2_annotated_2154_4376_2602_4824.png': 'open fout', 'PO23-05989_23_2_annotated_2513_4376_2961_4824.png': 'open fout', 'PO23-05989_23_2_annotated_2872_4376_3320_4824.png': 'open fout', 'PO23-05989_23_2_annotated_3231_4376_3679_4824.png': 'open fout', 'PO23-05989_23_2_annotated_3590_4376_4038_4824.png': 'open fout', 'PO23-05989_23_2_annotated_3949_4376_4397_4824.png': 'open fout', 'PO23-05989_23_2_annotated_4308_4376_4756_4824.png': 'open fout', 'PO23-05989_23_2_annotated_4667_4376_5115_4824.png': 'open fout', 'PO23-05989_23_2_annotated_5026_4376_5474_4824.png': 'open fout', 'PO23-05989_23_2_annotated_5385_4376_5833_4824.png': 'open fout', 'PO23-05989_23_2_annotated_5744_4376_6192_4824.png': 'open fout', 'PO23-05989_23_2_annotated_6051_4376_6499_4824.png': 'open fout', 'PO23-07206_112_2_annotated_0_0_448_448.png': 'zaag', 'PO23-07206_112_2_annotated_359_0_807_448.png': 'zaag', 'PO23-07206_112_2_annotated_718_0_1166_448.png': 
'zaag', 'PO23-07206_112_2_annotated_1077_0_1525_448.png': 'zaag', 'PO23-07206_112_2_annotated_1436_0_1884_448.png': 'zaag', 'PO23-07206_112_2_annotated_1795_0_2243_448.png': 'zaag', 'PO23-07206_112_2_annotated_2154_0_2602_448.png': 'zaag', 'PO23-07206_112_2_annotated_2513_0_2961_448.png': 'zaag', 'PO23-07206_112_2_annotated_2872_0_3320_448.png': 'zaag', 'PO23-07206_112_2_annotated_3231_0_3679_448.png': 'zaag', 'PO23-07206_112_2_annotated_3590_0_4038_448.png': 'zaag', 'PO23-07206_112_2_annotated_3949_0_4397_448.png': 'zaag', 'PO23-07206_112_2_annotated_4308_0_4756_448.png': 'zaag', 'PO23-07206_112_2_annotated_4667_0_5115_448.png': 'zaag', 'PO23-07206_112_2_annotated_5026_0_5474_448.png': 'zaag', 'PO23-07206_112_2_annotated_5385_0_5833_448.png': 'zaag', 'PO23-07206_112_2_annotated_5744_0_6192_448.png': 'zaag', 'PO23-07206_112_2_annotated_7767_5744_8215_6192.png': 'deuk', 'PO23-07206_112_2_annotated_7767_6103_8215_6551.png': 'deuk', 'PO23-07371_12_1_annotated_0_0_448_448.png': 'open fout', 'PO23-07371_12_1_annotated_0_359_448_807.png': 'open fout', 'PO23-07371_12_1_annotated_0_718_448_1166.png': 'open fout', 'PO23-07371_12_1_annotated_0_1077_448_1525.png': 'open fout', 'PO23-07371_12_1_annotated_0_1436_448_1884.png': 'open fout', 'PO23-07371_12_1_annotated_0_1795_448_2243.png': 'open fout', 'PO23-07371_12_1_annotated_0_2154_448_2602.png': 'open fout', 'PO23-07371_12_1_annotated_0_2513_448_2961.png': 'open fout', 'PO23-07371_12_1_annotated_0_2872_448_3320.png': 'open fout', 'PO23-07371_12_1_annotated_0_3231_448_3679.png': 'open fout', 'PO23-07371_12_1_annotated_0_3590_448_4038.png': 'open fout', 'PO23-07371_12_1_annotated_0_3949_448_4397.png': 'open fout', 'PO23-07371_12_1_annotated_0_4217_448_4665.png': 'open fout', 'PO23-07371_12_1_annotated_359_4217_807_4665.png': 'open fout', 'PO23-07371_12_1_annotated_718_4217_1166_4665.png': 'open fout', 'PO23-07371_12_1_annotated_1077_4217_1525_4665.png': 'open fout', 'PO23-07371_12_1_annotated_1436_4217_1884_4665.png': 'open 
fout', 'PO23-07371_12_1_annotated_1795_4217_2243_4665.png': 'open fout', 'PO23-07371_12_1_annotated_2154_4217_2602_4665.png': 'open fout', 'PO23-07371_12_1_annotated_2513_4217_2961_4665.png': 'open fout', 'PO23-07371_12_1_annotated_2872_4217_3320_4665.png': 'open fout', 'PO23-07371_12_1_annotated_3231_4217_3679_4665.png': 'open fout', 'PO23-07371_12_1_annotated_3590_4217_4038_4665.png': 'open fout', 'PO23-07371_12_1_annotated_3949_4217_4397_4665.png': 'open fout', 'PO23-07684_45_2_annotated_6103_0_6551_448.png': 'open knop', 'PO23-07684_45_2_annotated_6103_718_6551_1166.png': 'open knop', 'PO23-07684_45_2_annotated_3949_1436_4397_1884.png': 'barst', 'PO23-07684_45_2_annotated_4667_1436_5115_1884.png': 'kras', 'PO23-07684_45_2_annotated_5026_1436_5474_1884.png': 'kras', 'PO23-07684_45_2_annotated_6103_1436_6551_1884.png': 'open knop', 'PO23-07684_45_2_annotated_3949_1795_4397_2243.png': 'barst', 'PO23-07684_45_2_annotated_4308_1795_4756_2243.png': 'barst', 'PO23-07684_45_2_annotated_4667_1795_5115_2243.png': 'kras', 'PO23-07684_45_2_annotated_5026_1795_5474_2243.png': 'kras', 'PO23-07684_45_2_annotated_5385_1795_5833_2243.png': 'barst', 'PO23-07684_45_2_annotated_6103_1795_6551_2243.png': 'open knop', 'PO23-07684_45_2_annotated_6594_1795_7042_2243.png': 'veneer piece', 'PO23-07684_45_2_annotated_3949_2154_4397_2602.png': 'kras', 'PO23-07684_45_2_annotated_4308_2154_4756_2602.png': 'kras', 'PO23-07684_45_2_annotated_4667_2154_5115_2602.png': 'barst', 'PO23-07684_45_2_annotated_5026_2154_5474_2602.png': 'barst', 'PO23-07684_45_2_annotated_5385_2154_5833_2602.png': 'barst', 'PO23-07684_45_2_annotated_6103_2154_6551_2602.png': 'open knop', 'PO23-07684_45_2_annotated_6594_2154_7042_2602.png': 'veneer piece', 'PO23-07684_45_2_annotated_6103_2513_6551_2961.png': 'open knop', 'PO23-07684_45_2_annotated_6103_2872_6551_3320.png': 'open knop', 'PO23-07684_45_2_annotated_6103_3231_6551_3679.png': 'open knop', 'PO23-07919_18_1_annotated_5026_359_5474_807.png': 'snijfout', 
'PO23-07919_18_1_annotated_5385_359_5833_807.png': 'snijfout', 'PO23-07919_18_1_annotated_5026_718_5474_1166.png': 'snijfout', 'PO23-07919_18_1_annotated_5385_718_5833_1166.png': 'snijfout', 'PO23-07919_18_1_annotated_5385_1077_5833_1525.png': 'snijfout', 'PO23-07919_18_1_annotated_3231_1795_3679_2243.png': 'snijfout', 'PO23-07919_18_1_annotated_6103_1795_6551_2243.png': 'snijfout', 'PO23-07919_18_1_annotated_3231_2154_3679_2602.png': 'snijfout', 'PO23-07919_18_1_annotated_3590_2154_4038_2602.png': 'snijfout', 'PO23-07919_18_1_annotated_6103_2154_6551_2602.png': 'snijfout', 'PO23-07919_18_1_annotated_2872_3590_3320_4038.png': 'snijfout', 'PO23-08548_1_2_annotated_0_0_448_448.png': 'zaag', 'PO23-08548_1_2_annotated_0_359_448_807.png': 'zaag', 'PO23-08548_1_2_annotated_0_718_448_1166.png': 'zaag', 'PO23-08548_1_2_annotated_0_1077_448_1525.png': 'zaag', 'PO23-08548_1_2_annotated_0_1436_448_1884.png': 'zaag', 'PO23-08548_1_2_annotated_0_1795_448_2243.png': 'zaag', 'PO23-08548_1_2_annotated_0_2154_448_2602.png': 'zaag', 'PO23-08548_1_2_annotated_0_2513_448_2961.png': 'zaag', 'PO23-08548_1_2_annotated_0_2872_448_3320.png': 'zaag', 'PO23-08548_1_2_annotated_0_3231_448_3679.png': 'zaag', 'PO23-08548_1_2_annotated_0_3590_448_4038.png': 'zaag', 'PO23-08548_1_2_annotated_0_3949_448_4397.png': 'zaag', 'PO23-08548_1_2_annotated_0_4072_448_4520.png': 'zaag', 'PO23-09456_6_1_annotated_7539_5026_7987_5474.png': 'vlek', 'PO23-09561_46_1_annotated_0_7180_448_7628.png': 'deuk', 'PO23-09561_46_1_annotated_0_7242_448_7690.png': 'deuk', 'PO23-10367_9_1_annotated_8975_0_9423_448.png': 'barst', 'PO23-10367_9_1_annotated_9107_0_9555_448.png': 'barst', 'PO23-10367_9_1_annotated_5744_359_6192_807.png': 'barst', 'PO23-10367_9_1_annotated_6103_359_6551_807.png': 'barst', 'PO23-10367_9_1_annotated_6462_359_6910_807.png': 'barst', 'PO23-10367_9_1_annotated_6821_359_7269_807.png': 'barst', 'PO23-10367_9_1_annotated_7180_359_7628_807.png': 'barst', 'PO23-10367_9_1_annotated_7539_359_7987_807.png': 
'barst', 'PO23-10367_9_1_annotated_7898_359_8346_807.png': 'barst', 'PO23-10367_9_1_annotated_8257_359_8705_807.png': 'barst', 'PO23-10367_9_1_annotated_8616_359_9064_807.png': 'barst', 'PO23-10367_9_1_annotated_8975_359_9423_807.png': 'barst', 'PO23-10367_9_1_annotated_9107_359_9555_807.png': 'barst', 'PO23-12572_3_2_annotated_8975_1436_9423_1884.png': 'open voeg', 'PO23-12572_3_2_annotated_9334_1436_9782_1884.png': 'open voeg', 'PO23-12572_3_2_annotated_9675_1436_10123_1884.png': 'open voeg', 'PO23-12572_3_2_annotated_0_3231_448_3679.png': 'open voeg', 'PO23-12572_3_2_annotated_0_3590_448_4038.png': 'open voeg', 'PO23-12572_3_2_annotated_0_3949_448_4397.png': 'open voeg', 'PO23-12572_3_2_annotated_0_4308_448_4756.png': 'open voeg', 'PO23-12884_2_1_annotated_9111_0_9559_448.png': 'zaag', 'PO23-12884_2_1_annotated_9111_359_9559_807.png': 'zaag', 'PO23-12884_2_1_annotated_9111_718_9559_1166.png': 'zaag', 'PO23-12884_2_1_annotated_9111_1077_9559_1525.png': 'zaag', 'PO23-12884_2_1_annotated_9111_1436_9559_1884.png': 'zaag', 'PO23-12884_2_1_annotated_9111_1795_9559_2243.png': 'zaag', 'PO23-12884_2_1_annotated_9111_2154_9559_2602.png': 'zaag', 'PO23-12884_2_1_annotated_9111_2513_9559_2961.png': 'zaag', 'PO23-12884_2_1_annotated_9111_2872_9559_3320.png': 'zaag', 'PO23-12884_2_1_annotated_9111_3231_9559_3679.png': 'zaag', 'PO23-12884_2_1_annotated_9111_3590_9559_4038.png': 'zaag', 'PO23-12884_2_1_annotated_9111_3949_9559_4397.png': 'zaag', 'PO23-13050_2_2_annotated_0_0_448_448.png': 'open fout', 'PO23-13050_2_2_annotated_0_359_448_807.png': 'open fout', 'PO23-13050_2_2_annotated_0_718_448_1166.png': 'open fout', 'PO23-13050_2_2_annotated_0_1077_448_1525.png': 'open fout', 'PO23-13050_2_2_annotated_0_1436_448_1884.png': 'open fout', 'PO23-13050_2_2_annotated_0_1795_448_2243.png': 'open fout', 'PO23-13050_2_2_annotated_0_2154_448_2602.png': 'open fout', 'PO23-13050_2_2_annotated_0_2513_448_2961.png': 'open fout', 'PO23-13050_2_2_annotated_0_2872_448_3320.png': 'open fout', 
'PO23-13050_2_2_annotated_0_3231_448_3679.png': 'open fout', 'PO23-13050_2_2_annotated_0_3590_448_4038.png': 'open fout', 'PO23-13050_2_2_annotated_0_3949_448_4397.png': 'open fout', 'PO23-13050_2_2_annotated_0_4308_448_4756.png': 'open fout', 'PO23-13050_2_2_annotated_0_4351_448_4799.png': 'open fout', 'PO23-13713_4_2_annotated_0_0_448_448.png': 'open knop', 'PO23-13713_4_2_annotated_6462_0_6910_448.png': 'zaag', 'PO23-13713_4_2_annotated_7180_0_7628_448.png': 'zaag', 'PO23-13713_4_2_annotated_7539_0_7987_448.png': 'zaag', 'PO23-13713_4_2_annotated_7609_0_8057_448.png': 'zaag', 'PO23-13713_4_2_annotated_0_359_448_807.png': 'open knop', 'PO23-13713_4_2_annotated_0_718_448_1166.png': 'open knop', 'PO23-13713_4_2_annotated_0_1077_448_1525.png': 'open knop', 'PO23-13713_4_2_annotated_0_3949_448_4397.png': 'zaag', 'PO23-13713_4_2_annotated_0_4251_448_4699.png': 'zaag', 'PO23-17097_4_2_annotated_8257_7180_8705_7628.png': 'deuk', 'PO23-17097_4_2_annotated_8338_7180_8786_7628.png': 'deuk', 'PO23-17097_4_2_annotated_8257_7461_8705_7909.png': 'deuk', 'PO23-17097_4_2_annotated_8338_7461_8786_7909.png': 'deuk', 'PO23-18372_18_2_annotated_8616_7456_9064_7904.png': 'deuk', 'PO23-18372_18_2_annotated_8642_7456_9090_7904.png': 'deuk', 'PO23-20489_42_2_annotated_11129_1795_11577_2243.png': 'veneer piece', 'PO23-20489_42_2_annotated_11339_1795_11787_2243.png': 'veneer piece', 'PO23-20489_42_2_annotated_10770_2154_11218_2602.png': 'veneer piece', 'PO23-20489_42_2_annotated_11129_2154_11577_2602.png': 'veneer piece', 'PO23-20489_42_2_annotated_11339_2154_11787_2602.png': 'veneer piece', 'PO23-20951_1_1_annotated_9110_0_9558_448.png': 'zaag', 'PO23-20951_1_1_annotated_9110_359_9558_807.png': 'zaag', 'PO23-20951_1_1_annotated_9110_718_9558_1166.png': 'zaag', 'PO23-20951_1_1_annotated_9110_1077_9558_1525.png': 'zaag', 'PO23-20951_1_1_annotated_9110_1436_9558_1884.png': 'zaag', 'PO23-20951_1_1_annotated_9110_1795_9558_2243.png': 'zaag', 'PO23-20951_1_1_annotated_9110_2154_9558_2602.png': 
'zaag', 'PO23-20951_1_1_annotated_9110_2513_9558_2961.png': 'zaag', 'PO23-20951_1_1_annotated_9110_2872_9558_3320.png': 'zaag', 'PO23-20951_1_1_annotated_9110_3231_9558_3679.png': 'zaag', 'PO23-20951_1_1_annotated_9110_3590_9558_4038.png': 'zaag', 'PO23-20951_1_1_annotated_9110_3949_9558_4397.png': 'zaag', 'PO23-20951_1_1_annotated_9110_4087_9558_4535.png': 'zaag', 'PO23-21526_9_2_annotated_2872_0_3320_448.png': 'deuk', 'PO23-21526_9_2_annotated_3231_0_3679_448.png': 'deuk', 'PO23-21526_9_2_annotated_7539_0_7987_448.png': 'zaag', 'PO23-21526_9_2_annotated_7898_0_8346_448.png': 'zaag', 'PO23-21526_9_2_annotated_8257_0_8705_448.png': 'zaag', 'PO23-21526_9_2_annotated_8616_0_9064_448.png': 'zaag', 'PO23-21526_9_2_annotated_8975_0_9423_448.png': 'zaag', 'PO23-21526_9_2_annotated_9334_0_9782_448.png': 'zaag', 'PO23-21526_9_2_annotated_9693_0_10141_448.png': 'zaag', 'PO23-21526_9_2_annotated_10052_0_10500_448.png': 'zaag', 'PO23-21526_9_2_annotated_10355_0_10803_448.png': 'zaag', 'PO23-21526_9_2_annotated_4667_718_5115_1166.png': 'vlek', 'PO23-21526_9_2_annotated_5026_718_5474_1166.png': 'vlek', 'PO23-21526_9_2_annotated_10355_7180_10803_7628.png': 'deuk', 'PO23-21526_9_2_annotated_10052_7416_10500_7864.png': 'deuk', 'PO23-21526_9_2_annotated_10355_7416_10803_7864.png': 'deuk', 'PO23-24021_50_1_annotated_359_3949_807_4397.png': 'vlek', 'PO23-24021_50_1_annotated_718_3949_1166_4397.png': 'vlek', 'PO23-24021_50_1_annotated_1077_3949_1525_4397.png': 'vlek', 'PO23-24021_50_1_annotated_1795_3949_2243_4397.png': 'vlek', 'PO23-24021_50_1_annotated_2154_3949_2602_4397.png': 'vlek', 'PO23-24021_50_1_annotated_3590_3949_4038_4397.png': 'vlek', 'PO23-24021_50_1_annotated_5026_3949_5474_4397.png': 'vlek', 'PO23-24021_50_1_annotated_5385_3949_5833_4397.png': 'vlek', 'PO23-24021_50_1_annotated_6462_3949_6910_4397.png': 'vlek', 'PO23-24021_50_1_annotated_6821_3949_7269_4397.png': 'vlek', 'PO23-24021_50_1_annotated_7898_3949_8346_4397.png': 'vlek', 
'PO23-24021_50_1_annotated_8257_3949_8705_4397.png': 'vlek', 'PO23-24021_50_1_annotated_9693_3949_10141_4397.png': 'vlek', 'PO23-24021_50_1_annotated_359_4042_807_4490.png': 'vlek', 'PO23-24021_50_1_annotated_718_4042_1166_4490.png': 'vlek', 'PO23-24021_50_1_annotated_1077_4042_1525_4490.png': 'vlek', 'PO23-24021_50_1_annotated_1795_4042_2243_4490.png': 'vlek', 'PO23-24021_50_1_annotated_2154_4042_2602_4490.png': 'vlek', 'PO23-24021_50_1_annotated_3590_4042_4038_4490.png': 'vlek', 'PO23-24021_50_1_annotated_5026_4042_5474_4490.png': 'vlek', 'PO23-24021_50_1_annotated_5385_4042_5833_4490.png': 'vlek', 'PO23-24021_50_1_annotated_6462_4042_6910_4490.png': 'vlek', 'PO23-24021_50_1_annotated_6821_4042_7269_4490.png': 'vlek', 'PO23-24021_50_1_annotated_7898_4042_8346_4490.png': 'vlek', 'PO23-24021_50_1_annotated_8257_4042_8705_4490.png': 'vlek', 'PO23-24021_50_1_annotated_9693_4042_10141_4490.png': 'vlek'}

Test Accuracy: 0.9861

Test Recall: 0.6381

Test Precision: 0.7880

# Confusion matrix

# Build one row per category: [predicted as background, predicted as fault]
data = confusion_matrix_dict
categories = list(data.keys())
confusion_matrix = []

# Populate the confusion matrix
for category in categories:
    row = [data[category]["guess_bg"], data[category]["guess_fault"]]
    confusion_matrix.append(row)

# Print the confusion matrix
print(f"{'':>12} {'Background':>10} {'Fault':>5}")
for i, category in enumerate(categories):
    print(f"{category:>12}: {confusion_matrix[i][0]:>10} {confusion_matrix[i][1]:>5}")

             Background Fault
   open fout:         25   124
   open voeg:          5    16
        deuk:          1    14
        zaag:         50    79
       barst:         10    10
   open knop:          9     3
    snijfout:          8     3
veneer piece:          2     7
        vlek:         30     2
    ongekend:          2     0
        kras:         14    17
          bg:      16076    74
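The headline metrics can be cross-checked against the confusion matrix by treating every fault category as the positive class and `bg` as the negative class. The sketch below is illustrative only: the `counts` dictionary is copied by hand from the printed matrix, and the helper names `binary_metrics` and `per_category_recall` are assumptions that do not appear in the notebook.

```python
# Counts copied from the printed confusion matrix:
# category -> (windows predicted as background, windows predicted as fault)
counts = {
    "open fout": (25, 124),
    "open voeg": (5, 16),
    "deuk": (1, 14),
    "zaag": (50, 79),
    "barst": (10, 10),
    "open knop": (9, 3),
    "snijfout": (8, 3),
    "veneer piece": (2, 7),
    "vlek": (30, 2),
    "ongekend": (2, 0),
    "kras": (14, 17),
    "bg": (16076, 74),
}


def binary_metrics(counts, negative_class="bg"):
    """Collapse per-category counts into binary accuracy, recall and precision."""
    tn, fp = counts[negative_class]
    fault_rows = [v for name, v in counts.items() if name != negative_class]
    fn = sum(bg for bg, _ in fault_rows)        # faults missed (predicted background)
    tp = sum(fault for _, fault in fault_rows)  # faults found (predicted fault)
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,
        "recall": tp / (tp + fn),
        "precision": tp / (tp + fp),
    }


def per_category_recall(counts, negative_class="bg"):
    """Fraction of windows of each fault category that were predicted as fault."""
    return {
        name: fault / (bg + fault)
        for name, (bg, fault) in counts.items()
        if name != negative_class
    }


metrics = binary_metrics(counts)
recall_by_category = per_category_recall(counts)

for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
for name, value in sorted(recall_by_category.items(), key=lambda kv: kv[1]):
    print(f"{name:>12}: {value:.2f}")
```

With these counts the three values round to 0.9861, 0.6381 and 0.7880, matching the reported test metrics. The per-category recall shows which categories account for most of the missed faults, for example `vlek`, where only 2 of 32 windows are detected.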

Cut-out Method#

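This section describes how the dataset for the cut-out method is generated. Each annotated fault is cropped from the original image into its own window, padded to a minimum size so that the fault stays centered wherever the image borders allow. The cropped regions are then blacked out in the original image, which is saved as the background sample. The resulting dataset can be trained with the same training code described earlier.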

# Directory paths
main_directory = "cutout_folders"
cutout_directory = main_directory + "/cutout"
train = "train"
validate = "validate"
test = "test"
dataset = main_directory + "/dataset"

# Create dataset & cutout folders for each class and split
for class_name in classes:
    for split in [train, test, validate]:
        os.makedirs(f"{dataset}/{split}/{class_name}", exist_ok=True)
        os.makedirs(f"{cutout_directory}/{split}/{class_name}", exist_ok=True)

# Map category IDs to category names (spaces replaced by underscores)
category_dict = {}
for cat in coco_data['categories']:
    category_dict[cat['id']] = cat['name'].replace(" ", "_")

print(category_dict)

# Minimum width and height (in pixels) of each cut-out window
min_dim = size

# Maps each cut-out file name to its fault category
image_fault_dic = {}

# Loop over all images in the dataset
for image in tqdm(coco_data['images'], desc='Processing images'):
    # Get the file name with and without its extension
    filename = image['file_name']
    filename_without_extension = os.path.splitext(filename)[0]

    # Load the image; cv2.imread returns None if the file cannot be read
    file = cv2.imread(dataset_directory + "/" + filename)
    if file is None:
        tqdm.write(f"Skipping unreadable image: {filename}")
        continue
    height, width = file.shape[:2]

    # Regions that will be blacked out in the background image
    exclusion_zones = []

    # Collect the bounding boxes and fault names annotated on this image
    bbox_array = []
    fault_array = []
    for annotation in coco_data['annotations']:
        if image['id'] == annotation['image_id']:
            bbox_array.append(annotation['bbox'])
            fault_array.append(category_dict[annotation['category_id']])

    # Cut out each fault, padding its bounding box up to the minimum dimensions
    for index, bbox in enumerate(bbox_array):
        x, y, w, h = (int(v) for v in bbox)

        # Padding needed to reach the minimum dimensions, split evenly on both sides
        extra_width = max(0, min_dim - w)
        extra_height = max(0, min_dim - h)
        extra_left = extra_width // 2
        extra_right = extra_width - extra_left
        extra_above = extra_height // 2
        extra_below = extra_height - extra_above

        # Apply the padding, clamped to the image boundaries
        x_start = max(0, x - extra_left)
        y_start = max(0, y - extra_above)
        x_end = min(width, x + w + extra_right)
        y_end = min(height, y + h + extra_below)

        # If clamping left the window too small, extend it on the opposite side
        if (x_end - x_start) < min_dim:
            if x_end == width:  # Clipped at the right edge: extend to the left
                x_start = max(0, x_end - min_dim)
            else:  # Clipped at the left edge: extend to the right
                x_end = min(width, x_start + min_dim)
        if (y_end - y_start) < min_dim:
            if y_end == height:  # Clipped at the bottom edge: extend upwards
                y_start = max(0, y_end - min_dim)
            else:  # Clipped at the top edge: extend downwards
                y_end = min(height, y_start + min_dim)

        # Every annotated fault is excluded from the background image
        exclusion_zones.append((x_start, y_start, x_end, y_end))

        # Save the cut-out only for the fault categories listed in keepers
        fault_name = fault_array[index]
        if fault_name in keepers:
            # Create a new file name for the cropped image and record its category
            new_file_name = f"{filename_without_extension}_window_{x}_{y}_{fault_name}"
            image_fault_dic[new_file_name] = fault_name

            # Crop the image and save it in the fault class folder
            cropped_image = file[y_start:y_end, x_start:x_end]
            save_path = f"{cutout_directory}/{split_dict[filename]}/{classes[1]}/{new_file_name}.jpg"
            cv2.imwrite(save_path, cropped_image)

    # Set the pixels of every exclusion zone to black in the original image
    for x_start, y_start, x_end, y_end in exclusion_zones:
        file[y_start:y_end, x_start:x_end] = 0

    # Save the blacked-out original image as the background sample
    save_path = f"{cutout_directory}/{split_dict[filename]}/{classes[0]}/{filename_without_extension}.jpg"
    cv2.imwrite(save_path, file)
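The window arithmetic in the loop above can be checked in isolation. The following is a minimal sketch that factors the same padding and clamping steps into a pure function, assuming COCO-style `[x, y, w, h]` bounding boxes. The names `expand_interval` and `cutout_window` are hypothetical helpers introduced for illustration, and the image size of 4000 x 3000 pixels and the minimum dimension of 448 are example values only.

```python
def expand_interval(start, length, min_len, limit):
    """Grow [start, start + length) to at least min_len, clamped to [0, limit].

    The padding is split evenly on both sides; if one side is clipped by the
    image boundary, the window is extended on the opposite side instead.
    """
    extra = max(0, min_len - length)
    before = extra // 2
    after = extra - before

    lo = max(0, start - before)
    hi = min(limit, start + length + after)

    if hi - lo < min_len:
        if hi == limit:  # clipped at the far edge: extend towards 0
            lo = max(0, hi - min_len)
        else:            # clipped at 0: extend towards the far edge
            hi = min(limit, lo + min_len)
    return lo, hi


def cutout_window(bbox, image_width, image_height, min_dim):
    """Return (x_start, y_start, x_end, y_end) for a COCO-style [x, y, w, h] box."""
    x, y, w, h = (int(v) for v in bbox)
    x_start, x_end = expand_interval(x, w, min_dim, image_width)
    y_start, y_end = expand_interval(y, h, min_dim, image_height)
    return x_start, y_start, x_end, y_end


# Example values (assumed): a 4000 x 3000 image and a 448-pixel minimum window
examples = {
    "interior": [1000, 500, 100, 50],
    "top-left corner": [10, 20, 100, 50],
    "bottom-right corner": [3950, 2980, 40, 15],
    "larger than minimum": [100, 100, 600, 500],
}
for label, bbox in examples.items():
    print(f"{label:>20}: {cutout_window(bbox, 4000, 3000, 448)}")
```

A fault in the interior ends up centered in a 448 x 448 window, a fault near a corner gets a full-size window shifted to stay inside the image, and a box that already exceeds the minimum is returned unchanged. The window can only be smaller than the minimum when the image itself is smaller than `min_dim` along that axis.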